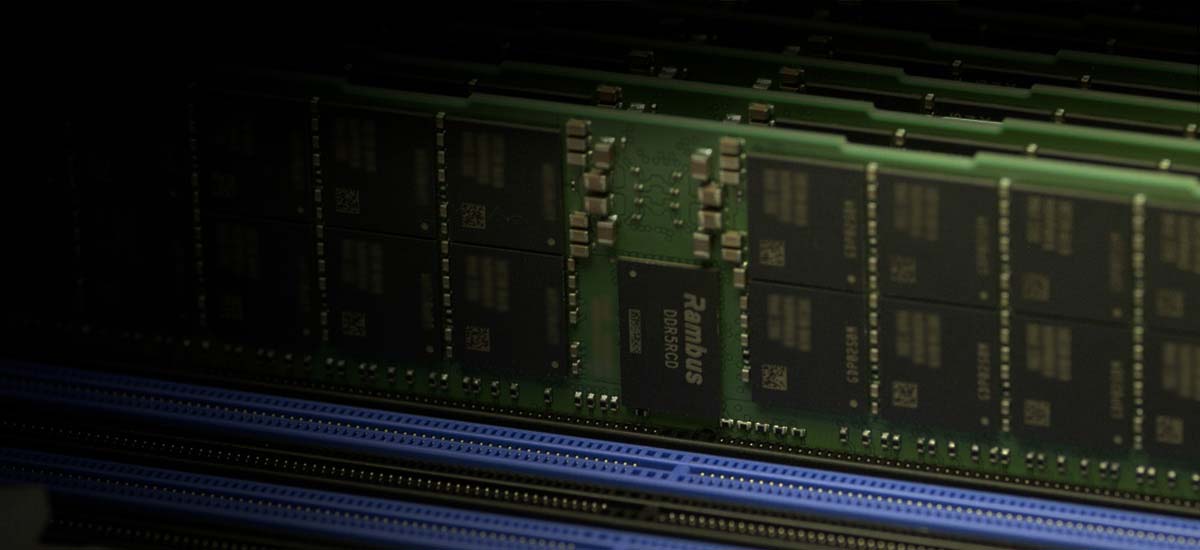

Providing memory bandwidth and capacity to unleash processing power

Industry-leading Chips and Silicon IP Making Data Faster and Safer

Advanced data center workloads such as generative AI are growing in size and sophistication at a lightning pace. Increasing server memory and connectivity bandwidth is key to enabling this rapid growth.